Technical SEO: Boost Rankings & User Experience

Technical SEO is the backbone of any successful search engine optimization strategy. While on-page and off-page SEO focus on content and backlinks, technical SEO ensures that your website is structured, accessible, and optimized for search engines to crawl and index effectively. Think of it as the essential infrastructure of your website: the plumbing, wiring, and structural integrity that allow everything else to function properly. Without a solid technical foundation, even the best content and strongest backlinks might fail to deliver their full potential in search engine rankings.

This guide is designed to demystify technical SEO. We’ll break down its core components, from crawlability and indexing to site speed and mobile-friendliness, explain why they matter, and provide actionable insights you can use to optimize your website’s technical health and integrate these practices into your overall SEO strategy for long-term success. Whether you’re a website owner, marketer, or aspiring SEO professional, understanding and implementing technical SEO best practices is crucial for improving your site’s visibility, driving organic traffic, and, ultimately, enhancing user experience. Let’s dive into the engine room of SEO.

What is Technical SEO and Why Does It Matter?

Before we delve into the specifics, let’s establish a clear understanding of what technical SEO encompasses and why it demands your attention.

Defining Technical SEO

Technical SEO refers to the process of optimizing your website’s infrastructure so that search engine crawlers (like Googlebot) can crawl, interpret, and index your content without issues. Unlike on-page SEO (which focuses on content optimization, such as keywords and headings) or off-page SEO (which deals with external signals like backlinks and brand mentions), technical SEO is concerned with how your website performs and how accessible it is from a search engine’s perspective. Key areas include site speed, mobile-friendliness, site architecture, security, structured data, and indexation management.

The Impact on Search Rankings

Search engines like Google aim to provide users with the best possible results for their queries. This means prioritizing websites that are not only relevant and authoritative but also accessible, fast, secure, and easy to use. If search engines encounter technical roadblocks – such as being unable to crawl certain pages, encountering slow load times, finding confusing site structures, or detecting security issues – they may struggle to index your content correctly or may rank it lower.

In practice, issues like slow page speed, crawl errors, or improper indexing can severely hinder your site’s ability to rank and reduce its visibility in search engine results pages (SERPs). If you struggle to resolve these problems yourself, consider hiring an SEO agency in Malaysia.

The Link Between Technical SEO and User Experience (UX)

Technical SEO and User Experience are intrinsically linked. Many technical optimization efforts directly translate into a better experience for your visitors. For example:

- Site Speed: Faster loading times reduce frustration and decrease bounce rates.

- Mobile-Friendliness: Ensures users on smartphones and tablets can navigate and consume content easily.

- Clear Site Architecture: Helps users find what they’re looking for quickly.

- HTTPS Security: Builds trust and assures users their connection is secure.

Search engines also aim to reward sites that satisfy their users. A poor technical setup often leads to poor UX, such as slow pages, high bounce rates, or pogo-sticking (searchers quickly returning to the results page), which can signal that your site isn’t satisfying user intent and potentially harm your rankings. Optimizing technical aspects therefore serves both search engines and users: a fast, secure, and well-structured site keeps visitors engaged and increases the likelihood of conversions.

Key Pillars of Technical SEO

Technical SEO covers a broad range of elements. Let’s break down the most crucial pillars you need to focus on.

Crawlability & Indexability: Making Your Site Discoverable

Before your site can rank, search engines need to find (crawl) and store (index) its content. Ensuring this process is smooth is fundamental.

Robots.txt

The robots.txt file is a simple text file located in your website’s root directory (e.g., https://thewebseo.com/robots.txt). It provides instructions to web crawlers about which pages or sections of your site they should not crawl.

- Purpose: Primarily used to prevent crawlers from accessing non-public areas (like admin logins), duplicate content pages (e.g., print versions), or resource-intensive scripts.

- Common Pitfalls: Accidentally blocking important CSS or JavaScript files (which can prevent Google from rendering pages correctly) or disallowing entire sections you do want indexed. Always double-check your robots.txt directives (Allow:, Disallow:).
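
Before deploying changes, it can help to test how a crawler would read your rules. The sketch below uses Python's standard-library `urllib.robotparser` against a hypothetical robots.txt (the paths and `www.yourdomain.com` URLs are illustrative). One caveat: Python's parser applies rules in file order (first match wins), whereas Google applies the most specific matching rule, so the narrower `Allow` line is placed before the broader `Disallow` to keep both interpretations consistent.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content, for illustration only.
ROBOTS_TXT = """\
User-agent: *
Allow: /wp-admin/admin-ajax.php
Disallow: /wp-admin/
Disallow: /print/
"""

def check_urls(robots_txt, urls, user_agent="Googlebot"):
    """Return {url: True if crawling is allowed} under the given robots.txt rules."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())  # parse() also marks the rules as loaded
    return {url: parser.can_fetch(user_agent, url) for url in urls}

if __name__ == "__main__":
    results = check_urls(ROBOTS_TXT, [
        "https://www.yourdomain.com/wp-admin/admin-ajax.php",
        "https://www.yourdomain.com/wp-admin/options.php",
        "https://www.yourdomain.com/print/technical-seo",
        "https://www.yourdomain.com/assets/main.css",  # CSS must stay crawlable
    ])
    for url, allowed in results.items():
        print("ALLOWED" if allowed else "BLOCKED", url)
```

Keep in mind that `Disallow` only stops crawling; a blocked URL can still end up indexed if other pages link to it, which is why indexing is controlled separately.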

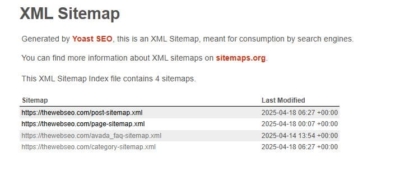

XML Sitemaps

An XML sitemap is a file that lists the important URLs on your website that you want search engines to crawl and index.

- Purpose: Helps search engines discover all your relevant pages, especially new content or pages that might be harder to find through normal crawling (e.g., deep within the site architecture).

- Creation & Submission: Can often be generated automatically by CMS platforms (like WordPress via SEO plugins) or using online sitemap generators. Submit your sitemap URL via Google Search Console to ensure Google knows where to find it. Keep it updated as you add or remove content.
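
A CMS or SEO plugin will usually generate the sitemap for you, but the format itself is simple. As a minimal sketch, assuming a hypothetical list of (URL, last-modified date) pairs, the Python below builds a sitemap in the sitemaps.org format; `ElementTree` escapes characters such as `&` in URLs automatically.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"

def build_sitemap(entries):
    """Build sitemap XML from (loc, lastmod) pairs; lastmod may be None."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in entries:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc  # absolute, canonical URL
        if lastmod:
            ET.SubElement(url, "lastmod").text = lastmod  # W3C date, e.g. 2024-05-01
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body

if __name__ == "__main__":
    print(build_sitemap([
        ("https://www.yourdomain.com/", "2024-05-01"),
        ("https://www.yourdomain.com/technical-seo/site-speed-optimization", None),
    ]))
```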

Crawl Budget

Crawl budget refers to the number of pages Googlebot can and wants to crawl on your website within a certain timeframe. While less of a concern for smaller websites, large sites (millions of pages) need to manage it efficiently.

- Factors: Site speed (faster sites can be crawled more), server errors (errors waste budget), number of URLs, and site popularity influence crawl budget.

- Optimization: Ensure fast load times, minimize redirect chains, fix broken links (404s), block unimportant URLs via robots.txt, and use XML sitemaps effectively.
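
Redirect chains (for example, http to https, then https to www) cost crawlers an extra request per hop. Given a redirect map exported from a crawler (the URLs below are hypothetical), this Python sketch follows each redirect to its final destination, raises an error on loops, and lists sources that take more than one hop so they can be pointed straight at the final URL.

```python
def resolve(url, redirects, max_hops=10):
    """Follow redirects from url and return the full chain; raise on loops."""
    chain = [url]
    while chain[-1] in redirects:
        nxt = redirects[chain[-1]]
        if nxt in chain:
            raise ValueError("redirect loop: " + " -> ".join(chain + [nxt]))
        chain.append(nxt)
        if len(chain) - 1 > max_hops:
            raise ValueError("too many redirect hops from " + url)
    return chain

def find_chains(redirects):
    """Map each source needing 2+ hops to the final URL it should redirect to directly."""
    fixes = {}
    for source in redirects:
        chain = resolve(source, redirects)
        if len(chain) > 2:  # more than one hop
            fixes[source] = chain[-1]
    return fixes

if __name__ == "__main__":
    redirects = {  # hypothetical source -> target map
        "http://yourdomain.com/old": "https://yourdomain.com/old",
        "https://yourdomain.com/old": "https://www.yourdomain.com/new",
    }
    print(find_chains(redirects))
```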

Indexing Control

You need control over which pages get indexed.

- Meta Robots Tag (noindex): An HTML tag placed in the <head> section of a page telling search engines not to include that specific page in their index. Useful for thin content pages, thank-you pages, internal search results, or staging environments. Use it cautiously, and don’t also block the page in robots.txt: crawlers must be able to fetch the page to see the tag. (<meta name="robots" content="noindex">)

- Canonical Tags (rel="canonical"): An HTML tag used when you have multiple URLs with similar or identical content. It tells search engines which URL represents the master version (the “canonical” URL) that should be indexed and credited with ranking signals. Essential for managing duplicate content issues arising from URL parameters, print versions, or content syndication. (<link rel="canonical" href="https://www.yourdomain.com/preferred-page-url" />)
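
To audit these tags at scale, a crawler essentially parses each page's `<head>`. The Python sketch below uses the standard-library `html.parser` to report whether a page carries a `noindex` directive and which canonical URL it declares. It is deliberately minimal: it only reads `<meta name="robots">` and ignores crawler-specific tags (such as `googlebot`) and the `X-Robots-Tag` HTTP header.

```python
from html.parser import HTMLParser

class IndexingTags(HTMLParser):
    """Collect the meta robots noindex flag and rel=canonical URL from HTML."""

    def __init__(self):
        super().__init__()
        self.noindex = False
        self.canonical = None

    def handle_starttag(self, tag, attrs):
        # html.parser lowercases tag and attribute names; values may be None.
        attrs = {name: (value or "") for name, value in attrs}
        if tag == "meta" and attrs.get("name", "").lower() == "robots":
            directives = {d.strip().lower() for d in attrs.get("content", "").split(",")}
            if directives & {"noindex", "none"}:  # "none" means noindex, nofollow
                self.noindex = True
        elif tag == "link" and "canonical" in attrs.get("rel", "").lower().split():
            self.canonical = attrs.get("href") or None

def audit_page(html):
    """Return (noindex, canonical_url) for one HTML document."""
    parser = IndexingTags()
    parser.feed(html)
    parser.close()
    return parser.noindex, parser.canonical

if __name__ == "__main__":
    page = ('<html><head><meta name="robots" content="noindex, follow">'
            '<link rel="canonical" href="https://www.yourdomain.com/preferred-page-url" />'
            "</head><body></body></html>")
    print(audit_page(page))  # (True, 'https://www.yourdomain.com/preferred-page-url')
```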

Handling Crawl Errors

Google Search Console’s “Page indexing” report (formerly “Coverage”, under Indexing) highlights pages Google tried to crawl or index but encountered issues with.

- Common Errors: 404 Not Found (broken links), 5xx Server Errors (server issues), Soft 404s (pages that look like errors but return a 200 OK status).

- Importance: Regularly check for and fix these errors. Where a relevant live page exists, 301 redirect the 404 URL to it; a 404 is acceptable for content that is genuinely gone. Server errors need investigation with your hosting provider. Fixing errors improves crawl efficiency and user experience.
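
If you export crawl results (URL, status code, page text) from a crawler or your server logs, a small script can sort them into the buckets above. In this Python sketch the soft-404 check is only a heuristic: it flags 200 responses whose text contains phrases typical of error pages, and the phrase list is an assumption you would tune for your site.

```python
# Phrases that often appear on error pages; an assumption to tune for your site.
SOFT_404_HINTS = ("page not found", "no longer available", "nothing was found")

def classify(status, body=""):
    """Bucket one crawled URL by HTTP status, plus a heuristic soft-404 check."""
    if status in (404, 410):
        return "not found"
    if 500 <= status <= 599:
        return "server error"
    if 300 <= status <= 399:
        return "redirect"
    if status == 200:
        text = body.lower()
        if any(hint in text for hint in SOFT_404_HINTS):
            return "possible soft 404"
        return "ok"
    return "other"

def triage(rows):
    """Group (url, status, body) rows by problem type, skipping healthy pages."""
    buckets = {}
    for url, status, body in rows:
        label = classify(status, body)
        if label != "ok":
            buckets.setdefault(label, []).append(url)
    return buckets

if __name__ == "__main__":
    crawl = [  # hypothetical crawl export
        ("/technical-seo/", 200, "Technical SEO guide"),
        ("/old-page", 404, ""),
        ("/checkout", 503, ""),
        ("/products/discontinued", 200, "Sorry, page not found"),
    ]
    print(triage(crawl))
```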

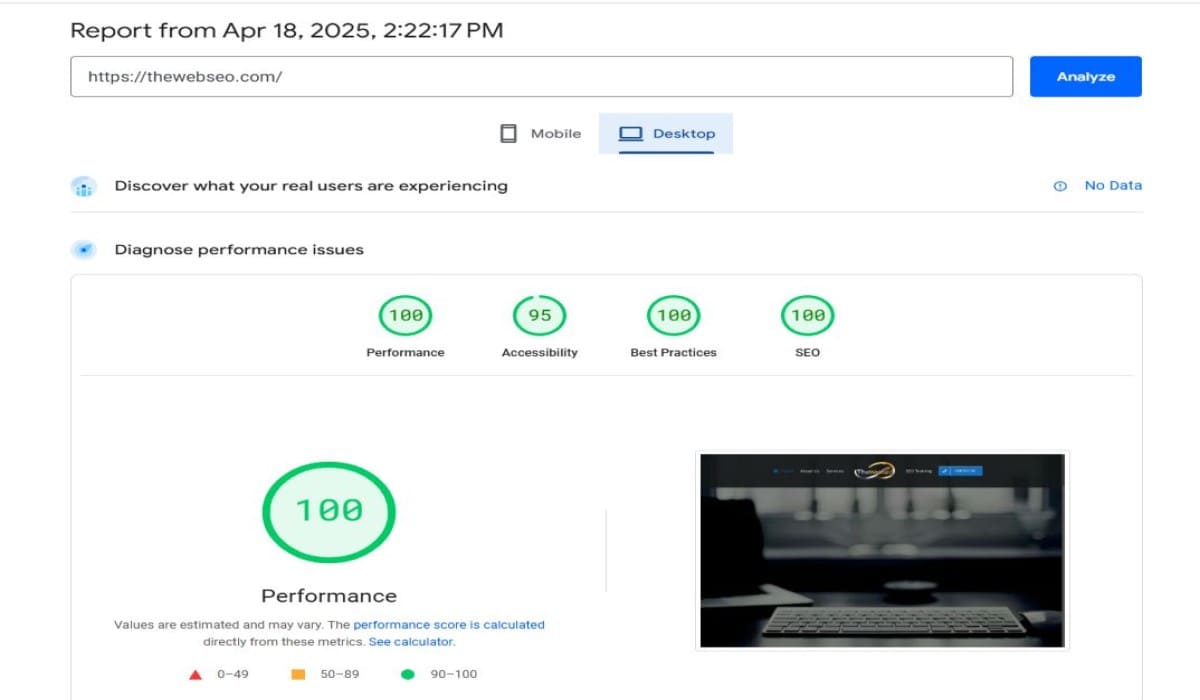

Website Speed & Performance: Meeting User Expectations

In today’s fast-paced digital world, website speed isn’t just a preference; it’s an expectation. Slow sites frustrate users and hinder rankings.

Why Page Speed is Critical

Page speed is a critical component of user experience: slow-loading pages frustrate users, increase bounce rates, and negatively impact conversions. Google recognizes this and uses page speed, particularly through Core Web Vitals, as a ranking factor for both mobile and desktop search results, so optimizing your site’s speed can support higher rankings and better engagement.

Understanding Core Web Vitals (LCP, FID/INP, CLS)

Google’s Core Web Vitals (CWV) are specific metrics measuring real-world user experience:

- Largest Contentful Paint (LCP): Measures loading performance – how quickly the largest visible content element on the page loads. Aim for under 2.5 seconds.

- First Input Delay (FID) / Interaction to Next Paint (INP): Measures interactivity. FID measured only the delay before the page could respond to a user’s first interaction (like clicking a button); INP, which replaced FID as a Core Web Vital in March 2024, considers responsiveness across all interactions on the page. Aim for an INP of 200 ms or less (the former FID target was 100 ms or less).

- Cumulative Layout Shift (CLS): Measures visual stability: how much visible content shifts unexpectedly while the page is in use. Aim for a score of 0.1 or less.
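
Google's published Core Web Vitals guidance also defines a "needs improvement" band (LCP up to 4 s, INP up to 500 ms, CLS up to 0.25) and evaluates each metric at the 75th percentile of page loads. The Python sketch below rates a list of hypothetical field samples that way; it uses the nearest-rank percentile, one of several percentile definitions, so results may differ slightly from Google's own tooling.

```python
import math

# (good_max, needs_improvement_max) per metric; LCP and INP in ms, CLS unitless.
THRESHOLDS = {
    "LCP": (2500, 4000),
    "INP": (200, 500),
    "CLS": (0.1, 0.25),
}

def p75(samples):
    """75th percentile using the nearest-rank method."""
    ordered = sorted(samples)
    rank = math.ceil(0.75 * len(ordered))  # 1-based rank
    return ordered[rank - 1]

def rate(metric, samples):
    """Return (p75 value, rating) for one metric's samples."""
    value = p75(samples)
    good, needs_improvement = THRESHOLDS[metric]
    if value <= good:
        return value, "good"
    if value <= needs_improvement:
        return value, "needs improvement"
    return value, "poor"

if __name__ == "__main__":
    print(rate("LCP", [1800, 2100, 2400, 3000]))  # (2400, 'good')
    print(rate("INP", [120, 180, 260, 650]))      # (260, 'needs improvement')
```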

Tools for Measurement

Use these tools to analyze your site’s speed and Core Web Vitals:

- Google PageSpeed Insights: Provides lab and field data (real user data, if available) for CWV and offers optimization suggestions.

- GTmetrix: Offers detailed performance reports, waterfall charts, and tracks performance over time.

- WebPageTest: Allows advanced testing from different locations and connection speeds.
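
PageSpeed Insights also offers a public API (v5), which is handy for testing many pages. This Python sketch builds a request URL and fetches the JSON report; field data (where available) appears under `loadingExperience` and lab data under `lighthouseResult`. The page URL is a placeholder, and an API key is recommended if you send requests regularly.

```python
import json
from urllib.parse import urlencode
from urllib.request import urlopen

PSI_ENDPOINT = "https://www.googleapis.com/pagespeedonline/v5/runPagespeed"

def psi_request_url(page_url, strategy="mobile", api_key=None):
    """Build a PageSpeed Insights API v5 request URL for one page."""
    params = {"url": page_url, "strategy": strategy}  # "mobile" or "desktop"
    if api_key:
        params["key"] = api_key
    return PSI_ENDPOINT + "?" + urlencode(params)

def run_psi(page_url, strategy="mobile", api_key=None, timeout=60):
    """Fetch and decode the JSON report (performs a network request)."""
    with urlopen(psi_request_url(page_url, strategy, api_key), timeout=timeout) as resp:
        return json.load(resp)

if __name__ == "__main__":
    report = run_psi("https://www.yourdomain.com/")
    print(sorted(report.keys()))
```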

Common Optimization Techniques

- Image Optimization: Compress images (using tools like TinyPNG or image software), use modern formats like WebP, and serve appropriately sized images (don’t upload massive images if they’re displayed small).

- Browser Caching: Instructs repeat visitors’ browsers to store static resources (like logos, CSS) locally, so they don’t need to be re-downloaded on subsequent visits.

- Minifying CSS, JavaScript, HTML: Removes unnecessary characters (whitespace, comments) from code files to reduce their size.

- Reducing Server Response Time: Optimize your server (database queries, server-side code) and choose high-quality web hosting.

- Using Content Delivery Networks (CDNs): Distributes your website’s static assets across multiple servers globally, delivering content from the server closest to the user, reducing latency.
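
Browser caching is controlled through HTTP response headers, typically `Cache-Control`. The Python sketch below encodes one common policy, an assumption rather than a universal rule: long-lived, `immutable` caching for static assets whose filenames contain a content hash (so an edited file gets a new URL), a shorter lifetime for other static files, and revalidation for HTML so content updates appear promptly. The extension list, hash pattern, and durations are illustrative.

```python
import re

STATIC_EXTENSIONS = (".css", ".js", ".png", ".jpg", ".jpeg", ".webp", ".svg", ".woff2")
# Hypothetical fingerprint convention: name.<8+ hex chars>.ext, e.g. main.3f9a1c2b.css
FINGERPRINT = re.compile(r"\.[0-9a-f]{8,}\.[a-z0-9]+$")

def cache_control_for(path):
    """Choose a Cache-Control header value for a request path."""
    path = path.lower()
    if path.endswith(STATIC_EXTENSIONS):
        if FINGERPRINT.search(path):
            return "public, max-age=31536000, immutable"  # 1 year; URL changes on edit
        return "public, max-age=86400"  # 1 day for unversioned assets
    return "no-cache"  # HTML: may be stored, but revalidated before reuse

if __name__ == "__main__":
    for p in ("/assets/main.3f9a1c2b.css", "/images/logo.png", "/technical-seo/"):
        print(p, "->", cache_control_for(p))
```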

Mobile-Friendliness: Catering to the Mobile Majority

With more searches happening on mobile devices than desktops, having a mobile-friendly website is non-negotiable.

Mobile-First Indexing Explained

Google predominantly uses the mobile version of your website for indexing and ranking (known as “mobile-first indexing”). This means if your mobile site is missing content or is poorly optimized compared to your desktop site, your rankings could suffer, even for desktop searches. Your mobile site is your primary site in Google’s eyes.

Responsive Web Design

This is Google’s recommended approach. Responsive design means your website layout automatically adjusts to fit the screen size of any device (desktop, tablet, smartphone) using flexible grids and CSS media queries. It uses a single URL and codebase, making it easier to manage and ensuring content parity between mobile and desktop.
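
Responsive layouts depend on the viewport meta tag, `<meta name="viewport" content="width=device-width, initial-scale=1">`, which tells mobile browsers to render at the device's width instead of a zoomed-out desktop width; CSS media queries then adapt the layout. As a quick sketch, this Python check (standard-library `html.parser`) confirms that a page declares `width=device-width`. It tests one signal only, not overall mobile-friendliness.

```python
from html.parser import HTMLParser

class ViewportCheck(HTMLParser):
    """Record the content of <meta name="viewport">, if present."""

    def __init__(self):
        super().__init__()
        self.viewport = None

    def handle_starttag(self, tag, attrs):
        attrs = {name: (value or "") for name, value in attrs}
        if tag == "meta" and attrs.get("name", "").lower() == "viewport":
            self.viewport = attrs.get("content", "")

def has_responsive_viewport(html):
    """True if the page's viewport meta tag sets width=device-width."""
    checker = ViewportCheck()
    checker.feed(html)
    checker.close()
    if checker.viewport is None:
        return False
    settings = [part.strip().replace(" ", "") for part in checker.viewport.split(",")]
    return "width=device-width" in settings
```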

Testing for Mobile-Friendliness

Google retired its standalone Mobile-Friendly Test tool and the Search Console “Mobile Usability” report in December 2023. To check mobile pages, run Lighthouse in Chrome DevTools with mobile emulation, or use PageSpeed Insights, which includes a mobile analysis.

Site Architecture & URL Structure: Organizing Your Content

A logical site structure helps both users and search engines understand and navigate your website effectively.

Importance of a Logical Structure

A well-planned site architecture ensures:

- Easy Navigation: Users can find information with minimal clicks.

- Clear Hierarchy: Search engines understand the relationship between pages and the relative importance of content.

- Link Equity Flow: Internal links distribute authority (PageRank) throughout your site. Aim for a relatively “flat” architecture where important pages are only a few clicks away from the homepage. Use categories and subcategories logically.
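
Click depth can be measured with a breadth-first search over your internal links, because BFS reaches each page first via the shortest path from the homepage. The Python sketch below assumes a hypothetical link graph (each page mapped to the pages it links to), for example exported from a crawler; it reports pages deeper than a chosen limit and orphan pages that no internal link reaches. The depth limit is an illustrative assumption, not a Google rule.

```python
from collections import deque

def click_depths(links, home="/"):
    """Fewest clicks from home to every reachable page (breadth-first search)."""
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, ()):
            if target not in depths:  # first visit in BFS = shortest path
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths

def find_problems(links, all_pages, home="/", max_depth=3):
    """Return (pages deeper than max_depth, pages unreachable from home)."""
    depths = click_depths(links, home)
    too_deep = sorted(p for p, d in depths.items() if d > max_depth)
    orphans = sorted(set(all_pages) - set(depths))
    return too_deep, orphans

if __name__ == "__main__":
    links = {  # hypothetical internal link graph
        "/": ["/technical-seo/", "/blog/"],
        "/technical-seo/": ["/technical-seo/site-speed-optimization"],
        "/blog/": ["/blog/page-2/"],
        "/blog/page-2/": ["/blog/old-post"],
    }
    print(click_depths(links))
```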

SEO-Friendly URL Best Practices

Your URLs should be clear and informative:

- Keep them short and descriptive.

- Include relevant keywords (naturally).

- Use hyphens (-) to separate words (not underscores _ or spaces).

- Use lowercase letters.

- Good Example: https://www.yourdomain.com/technical-seo/site-speed-optimization

- Bad Example: https://www.yourdomain.com/index.php?cat=123&article=4567
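
These rules are easy to apply automatically when pages are created. A minimal Python sketch of a slug generator, assuming Latin-script titles (characters from other scripts would be dropped by the ASCII step):

```python
import re
import unicodedata

def slugify(title):
    """Turn a page title into a short, lowercase, hyphen-separated URL slug."""
    # Split accented letters into base letter + accent, then drop the accents.
    text = unicodedata.normalize("NFKD", title).encode("ascii", "ignore").decode("ascii")
    text = text.lower()
    # Collapse every run of spaces, underscores, or punctuation into one hyphen.
    text = re.sub(r"[^a-z0-9]+", "-", text)
    return text.strip("-")

if __name__ == "__main__":
    print(slugify("Site Speed Optimization"))  # site-speed-optimization
```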

Internal Linking

Strategically link relevant pages within your website. This helps users discover related content and helps search engines understand the context and importance of pages. Use descriptive anchor text for your internal links.

Breadcrumbs

Breadcrumb navigation (e.g., Home > Technical SEO > Site Speed) shows users their current location within the site’s hierarchy and provides easy one-click access back to parent pages. Breadcrumbs also benefit SEO by helping Google understand your site structure.
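
To make breadcrumbs machine-readable, you can describe them with Schema.org `BreadcrumbList` markup in JSON-LD. A minimal Python sketch that renders a trail like the example above, assuming hypothetical URLs on `www.yourdomain.com`:

```python
import json

def breadcrumb_jsonld(trail):
    """Render schema.org BreadcrumbList JSON-LD from (name, url) pairs, root first."""
    data = {
        "@context": "https://schema.org",
        "@type": "BreadcrumbList",
        "itemListElement": [
            {"@type": "ListItem", "position": i, "name": name, "item": url}
            for i, (name, url) in enumerate(trail, start=1)  # positions start at 1
        ],
    }
    return '<script type="application/ld+json">\n' + json.dumps(data, indent=2) + "\n</script>"

if __name__ == "__main__":
    print(breadcrumb_jsonld([
        ("Home", "https://www.yourdomain.com/"),
        ("Technical SEO", "https://www.yourdomain.com/technical-seo/"),
        ("Site Speed", "https://www.yourdomain.com/technical-seo/site-speed-optimization"),
    ]))
```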

Structured Data (Schema Markup): Helping Search Engines Understand Context

Structured data uses vocabularies like Schema.org to give search engines additional context about your content, helping them understand it more deeply.

What is Structured Data?

It’s code added to your HTML (often using the JSON-LD format) that labels content elements. For example, you can mark up a recipe with its ingredients, cooking time, and ratings, or a product page with its price, availability, and reviews.

Benefits for SEO

The primary benefit is enabling Rich Results (formerly Rich Snippets) in search results. These are visually enhanced listings that can include star ratings, images, FAQs, prices, event dates, etc., making your listing more prominent and potentially increasing click-through rates (CTR).

Common Schema Types

There are hundreds of types, but some common ones include:

- Article / NewsArticle / BlogPosting

- Product

- Recipe

- FAQPage

- HowTo

- LocalBusiness

- Event

- Review

Implementation & Testing

You can add schema markup manually, via CMS plugins (like Yoast SEO or Rank Math for WordPress), or using Google Tag Manager. Always validate your implementation using Google’s Rich Results Test tool and the Schema Markup Validator to ensure it’s correct and eligible for rich results.

Website Security: Building Trust

Website security is crucial for protecting user data and building trust.

HTTPS Importance

HTTPS (Hypertext Transfer Protocol Secure) encrypts the connection between a user’s browser and your website server. Google confirmed HTTPS as a lightweight ranking signal in 2014. More importantly, browsers now flag non-HTTPS sites as “Not Secure,” which erodes user trust. It’s essential for any site, especially those handling sensitive information or transactions.

Implementing HTTPS Correctly

Obtain an SSL/TLS certificate (many hosting providers offer free certificates via Let’s Encrypt). Configure your server to use it, and implement permanent (301) redirects from all HTTP versions of your URLs to their corresponding HTTPS versions to consolidate signals and ensure users always land on the secure version.
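
Redirects like these are normally configured in your web server, CMS, or CDN, but the rule itself is simple. This Python sketch expresses it as a function you could use to check crawled URLs; it assumes, hypothetically, that the preferred version is `https://www.yourdomain.com` (choose www or non-www and stay consistent).

```python
from urllib.parse import urlsplit, urlunsplit

def redirect_target(url, preferred_host="www.yourdomain.com"):
    """Return the URL to 301-redirect to, or None if url is already canonical.

    Assumes (hypothetically) that HTTPS on preferred_host is the canonical version.
    """
    parts = urlsplit(url)
    if preferred_host.startswith("www."):
        bare_host = preferred_host[len("www."):]
    else:
        bare_host = preferred_host
    if (parts.hostname or "") not in (preferred_host, bare_host):
        return None  # not our site; leave untouched
    target = urlunsplit(("https", preferred_host, parts.path or "/", parts.query, parts.fragment))
    return None if target == url else target

if __name__ == "__main__":
    print(redirect_target("http://yourdomain.com/technical-seo/"))
    # https://www.yourdomain.com/technical-seo/
```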

Handling Duplicate Content

Duplicate content occurs when identical or substantially similar content appears on multiple URLs. Search engines can struggle to determine which version to index and rank.

Causes of Duplicate Content

Common technical causes include:

- www vs. non-www versions (http://domain.com vs. http://www.domain.com)

- HTTP vs. HTTPS versions

- URL parameters used for tracking or filtering (e.g., …?sessionid=123)

- Printer-friendly versions of pages

- Staging or development environments being indexed

- Content syndication without proper attribution (rel=canonical).

Solutions

- Canonical Tags (rel="canonical"): The primary method. Specify the preferred URL for indexing.

- 301 Redirects: Permanently redirect duplicate URLs to the canonical version (e.g., redirect http:// to https:// and non-www to www, or vice-versa – be consistent).

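- Parameter Handling: Google retired the URL Parameters tool in Google Search Console in 2022, so handle parameters on your site instead: keep tracking and session parameters out of internal links and point parameterized URLs to their canonical version, so that parameters don’t create duplicate content issues.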

- noindex tag: Use on versions you explicitly don’t want indexed (like printer-friendly pages).
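
Whichever solution you use, you first need to know which parameterized URLs are actually duplicates. This Python sketch normalizes URLs by dropping parameters assumed not to change content (`utm_*`, `sessionid`, and the ad click IDs `gclid` and `fbclid`; adjust the list for your site) and sorting the rest, so URLs that differ only in tracking parameters or parameter order compare equal. Sorting assumes parameter order never changes the page, which holds for most, but not all, sites.

```python
from urllib.parse import urlsplit, urlunsplit, parse_qsl, urlencode

# Parameters assumed not to change page content (hypothetical list; adjust per site).
IGNORED_PARAMS = {"sessionid", "gclid", "fbclid"}

def normalize_url(url):
    """Drop tracking/session parameters and sort the rest for duplicate detection."""
    parts = urlsplit(url)
    kept = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key.lower() not in IGNORED_PARAMS and not key.lower().startswith("utm_")
    ]
    kept.sort()
    # Fragments are never sent to the server, so they are dropped too.
    return urlunsplit((parts.scheme, parts.netloc.lower(), parts.path, urlencode(kept), ""))

if __name__ == "__main__":
    print(normalize_url("https://www.yourdomain.com/shoes?utm_source=news&color=red&sessionid=123"))
    # https://www.yourdomain.com/shoes?color=red
```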

Essential Technical SEO Tools & Auditing

To effectively manage technical SEO, you need the right tools and a process for auditing your site.

Must-Have Tools

- Google Search Console (GSC): Absolutely essential. Provides data directly from Google about how your site performs in search. Key reports for technical SEO include Page indexing (formerly Index Coverage), Core Web Vitals, HTTPS, Removals, Sitemaps, and Manual Actions.

- Google Analytics 4 (GA4): While primarily for tracking user behavior, GA4 insights can highlight potential UX issues related to technical problems (e.g., high bounce rates on slow mobile pages).

- Website Crawlers: Tools like Screaming Frog SEO Spider (desktop), Sitebulb (desktop), or cloud-based crawlers within platforms like Semrush or Ahrefs simulate search engine crawling. They help identify issues like broken links (404s), redirect chains, missing title tags/meta descriptions, duplicate content, indexation issues, and much more.

- Page Speed Testing Tools: Google PageSpeed Insights, GTmetrix, WebPageTest (as mentioned earlier).

- Rich Results Test & Schema Markup Validator: Google’s tools for validating your structured data implementation.

Conducting a Basic Technical SEO Audit

Regular technical audits are crucial for maintaining website health. A basic audit process might include:

- Crawl Your Website: Use a tool like Screaming Frog to identify crawl errors (404s, 5xx), redirect issues, title/meta tag problems, large images, and indexability status.

- Check Google Search Console: Review the Page indexing report for indexing errors, check Core Web Vitals scores and the HTTPS report, and look for any Manual Actions (penalties).

- Test Page Speed: Run key pages (homepage, main category pages, popular blog posts) through PageSpeed Insights.

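- Check Mobile-Friendliness: Run key pages through Lighthouse (with mobile emulation) or PageSpeed Insights, since Google retired its Mobile-Friendly Test tool in December 2023.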

- Verify HTTPS: Ensure your SSL certificate is valid and HTTP redirects correctly to HTTPS.

- Test Structured Data: Validate key pages with schema using the Rich Results Test.

- Review robots.txt and XML Sitemap: Ensure they are correctly configured and submitted.

Aim to perform checks regularly (monthly or quarterly, depending on site complexity and frequency of changes).

Conclusion

Technical SEO is the foundation of a successful SEO strategy. Focus on crawlability, indexing, speed, mobile-friendliness, and security to improve rankings and user experience. Technical SEO might seem daunting, but it’s a vital component of any successful digital marketing strategy: it lays the groundwork for search engines to discover, understand, and reward your valuable content, and it ensures your visitors have a positive, seamless experience.

Key Takeaways

- Technical SEO focuses on optimizing website infrastructure for crawling, indexing, and rendering.

- It directly impacts search rankings and user experience.

- Core pillars include crawlability/indexability, site speed, mobile-friendliness, site architecture, structured data, and security.

- Regular auditing using tools like Google Search Console and website crawlers is essential.

Technical SEO is not a “set it and forget it” task. Search engine algorithms evolve, web technologies change, content gets added, and websites naturally degrade over time (link rot, outdated plugins, etc.). Continuous monitoring, regular audits, and ongoing optimization are necessary to maintain technical health and stay competitive.

Don’t let technical issues undermine your content creation and link-building efforts. By prioritizing the technical foundation of your website – ensuring it’s fast, accessible, secure, and easily understood by search engines – you create a robust platform for long-term organic growth and provide a superior experience for your audience. Start by auditing your site, address the most critical issues first, and make technical SEO an integral part of your ongoing website maintenance and marketing strategy.